The final problem in my list of ten open problems in computational diversity linguistics touches upon a phenomenon that most linguists, let alone ordinary people, may never have heard of. As a result, the phenomenon does not have a real name in linguistics, which makes it even more difficult to talk about.

Semantic promiscuity, in brief, refers to the empirical observations that (1) the words in the lexicon of human languages are often built from already existing words or word parts, and (2) the words that are frequently "recycled", i.e., the words that are promiscuous (in a sense similar to that of promiscuous protein domains in biology; see Basu et al. 2008), denote very common concepts.

If this turns out to be true, that is, if the meaning of words determines, at least to some degree, their success in giving rise to new words, then it should be possible to derive a typology of promiscuous concepts, or some kind of cross-linguistic ranking of the concepts that turn out to be the most successful in the long run.

Our problem can thus be stated as follows (at least for the moment, since, as the next section shows, we still struggle to grasp the phenomenon completely):

Assuming a certain pre-selection of concepts that are expressed by as many languages as possible, can we find out which of the concepts in the sample give rise to the largest number of new words?

I am not completely happy with this problem definition, since a concept does not actually give rise to a new word; instead, a concept is expressed by a word that is then used to form a new word. But I have decided to leave the problem in this form for reasons of simplicity.
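To make the problem statement a bit more tangible, here is a minimal Python sketch. It assumes, hypothetically, that for each language we know which word expresses each pre-selected concept, which root morpheme that word contains, and a morpheme-segmented lexicon; all data in the example are invented toy data. For each concept, it counts the other words that reuse the root, ranks the concepts within each language, and averages the ranks across languages. Matching identical morpheme strings and averaging ranks are my own simplifying choices for illustration, not an established method, and the string matching ignores the allomorphy problems discussed further below.

```python
from statistics import mean

# Hypothetical toy data, invented for illustration: for each language, the word
# expressing each pre-selected concept (a tuple of morphemes) and its root
# morpheme, plus a small morpheme-segmented lexicon.
CONCEPTS = {
    "German": {
        "STAND": (("steh", "en"), "steh"),
        "FALL": (("fall", "en"), "fall"),
        "SIT": (("sitz", "en"), "sitz"),
    },
    "English": {
        "STAND": (("stand",), "stand"),
        "FALL": (("fall",), "fall"),
        "SIT": (("sit",), "sit"),
    },
}
LEXICON = {
    "German": [
        ("steh", "en"), ("ver", "steh", "en"), ("ent", "steh", "en"),
        ("auf", "steh", "en"), ("fall", "en"), ("ein", "fall", "en"),
        ("zu", "fall"), ("sitz", "en"),
    ],
    "English": [
        ("stand",), ("under", "stand"), ("with", "stand"), ("stand", "point"),
        ("fall",), ("down", "fall"), ("water", "fall"), ("rain", "fall"),
        ("sit",),
    ],
}


def derived_counts(concepts, lexicon):
    """Per concept: number of other words in the lexicon that reuse its root.

    Only identical morpheme strings are matched, so allomorphs are missed.
    """
    counts = {}
    for concept, (own_word, root) in concepts.items():
        counts[concept] = sum(
            1 for word in lexicon if root in word and word != own_word
        )
    return counts


def competition_ranks(counts):
    """Rank 1 = most derived words; tied concepts share the same rank."""
    return {
        concept: 1 + sum(1 for other in counts.values() if other > n)
        for concept, n in counts.items()
    }


def cross_linguistic_ranking(concepts_by_lang, lexicon_by_lang):
    """Average each concept's per-language rank (lower = more promiscuous)."""
    per_language = [
        competition_ranks(
            derived_counts(concepts_by_lang[lang], lexicon_by_lang[lang])
        )
        for lang in concepts_by_lang
    ]
    # Only rank concepts that are attested in every language of the sample.
    shared = set.intersection(*(set(ranks) for ranks in per_language))
    scores = {c: mean(ranks[c] for ranks in per_language) for c in shared}
    return sorted(scores.items(), key=lambda item: (item[1], item[0]))


if __name__ == "__main__":
    for concept, score in cross_linguistic_ranking(CONCEPTS, LEXICON):
        print(concept, score)
```

With the toy data, STAND comes out first (mean rank 1), followed by FALL (1.5) and SIT (3). Averaging ranks rather than raw counts keeps a language with a very large lexicon from dominating the ranking, at the price of discarding how large the differences between concepts are.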

Background on semantic promiscuity

The basic idea of semantic promiscuity goes back to my time as a PhD student in Düsseldorf. My supervisor at the time was Hans Geisler, a Romance linguist with a special interest in sound change and sensory-motor concepts. Sensory-motor concepts are concepts that are thought to be grounded in sensory-motor processes. More concretely, scholars assume that many abstract concepts expressed by many, if not all, languages of the world originate in concepts that denote concrete bodily experience (Ströbel 2016).

Thus, we can "grasp an idea", "face consequences", or "hold a thought". In such cases, we express something abstract by means of verbs whose original meaning is concrete and related to our bodily experience ("to grasp", "to face", "to hold").

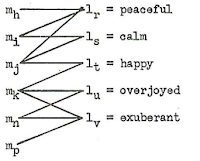

When I met Hans Geisler again in 2016 in Düsseldorf, he presented me with an article that he had recently submitted to an anthology that appeared two years later (Geisler 2018). This article, titled "Sind unsere Wörter von Sinnen?" (an approximate translation of this pun would be "Are our words out of their senses?"), investigates concepts such as "to stand" and "to fall" and their importance for the German lexicon. Geisler claims that it is due to the importance of the sensory-motor concepts of "standing" and "falling" that words built from stehen ("to stand") and fallen ("to fall") are among the most productive (or promiscuous) ones in the German lexicon.

Figure: Words built from fallen and stehen in German.

I found (and still find) this idea fascinating, since it may explain (if it turns out to hold true for a larger sample of the world's languages) the structure of a language's lexicon as a consequence of universal experiences shared among all humans.

Geisler did not have a term for the phenomenon at hand. However, at the same time I was working in a lab with biologists (led by Eric Bapteste and Philippe Lopez), who introduced me to the idea of domain promiscuity in biology during a long discussion about processes that are similar in linguistics and biology. In the paper reporting on these discussions, we proposed that comparing word formation processes in linguistics with protein assembly processes in biology could provide fruitful analogies for future investigations (List et al. 2016: 8ff). But we did not (yet) use the term promiscuity in the linguistic domain.

Geisler's idea that the success of a word in being used to form other words in the lexicon of a language may depend on the semantics of the original term changed my view of the topic completely, and I began to search for a good term to denote the phenomenon. I did not want to use the term "promiscuity", because of its original meaning.

Linguistics has the term "productive", which is used for particular morphemes that can easily be attached to existing words to form new ones (e.g., by turning a verb into a noun, or a noun into an adjective). However, "productivity" starts from the form and ignores the concepts, while concepts play a crucial role in Geisler's phenomenon.

At some point, I gave up and began to use the term "promiscuity" for lack of a better term, first in a blog post discussing Geisler's paper (List 2018). Later in 2018, Nathanael E. Schweikhard, a doctoral student in our research group, developed the idea further under the term semantic promiscuity (Schweikhard 2018), which is the subject of my tenth and last open problem in computational diversity linguistics (at least for 2019).

In our discussions, which were very fruitful, Schweikhard and I also learned that the idea of the expansion and attraction of concepts comes close to the idea of semantic promiscuity. This refers to Blank's (1997) observation that some concepts tend to frequently attract new words to express them (think of concepts affected by taboo, to take a simple example), while other concepts tend to give rise to many new words ("head" is a good example, if you think of all the meanings it takes on in different expressions). However, since Blank is interested in the form, while we are interested in the concept, I agree with Schweikhard in sticking with "promiscuity" instead of adopting Blank's terms.

Why it is hard to establish a typology of semantic promiscuity

Assuming that there are cross-linguistic tendencies that would confirm the hypothesis of semantic promiscuity, why is it so hard to find them? I see three major obstacles here: one related to the data, one related to annotation, and one related to comparison.

The data problem is one of sparseness. For most of the languages for which we have lexical data, the available data are so sparse that we often struggle to find even 200 words. I know this well, since we struggled with exactly this in a phylogenetic study of Sino-Tibetan languages, where we ended up discarding many interesting languages because the sources did not provide enough lexical data to fill our wordlists (Sagart et al. 2019).

In order to investigate semantic promiscuity, we need substantially more data than for phylogenetic studies, since we ultimately want to investigate the structure of word families inside a given language and compare these structures cross-linguistically. It is not clear where to start here, although it is clear that we cannot be exhaustive in linguistics in the way biologists can be when sequencing a whole gene or genome. I think that one would need at least 1,000 words per language to be able to start looking into semantic promiscuity.

The second problem concerns the annotation and analysis that would be needed to investigate the phenomenon sufficiently. Hans Geisler used large dictionaries of German that are digitally available and already annotated. For a cross-linguistic study of semantic promiscuity, however, all the annotation of word families would still have to be done from scratch.

Unfortunately, we have also seen that the algorithms for automated morpheme detection proposed so far usually perform poorly when it comes to detecting morpheme boundaries. In addition, word families often have a complex structure, and the parts that words share with other words are not necessarily identical, due to the numerous processes involved in word formation. So a simple algorithm that splits words into potential morphemes would not be enough. We would also need an algorithm that identifies language-internal cognate morphemes, and here, again, we are still waiting for computational linguists to develop convincing approaches.

The third problem, the comparison itself, concerns how word-family data can be compared across different languages. Since every language has its own word structure and a highly individual set of word families, it is not trivial to decide how annotated word-family data should be compared across multiple languages. One could try to compare words with the same meaning in different languages, but one might then miss many potentially interesting patterns, especially since we do not yet know how (or whether) promiscuity manifests itself across languages.

Traditional approaches

Apart from the work by Geisler (2018) mentioned above, there are some interesting studies on word formation and compounding in which scholars have addressed similar questions. Thus, Steve Pepper has (as far as I know) submitted his PhD thesis on The Typology and Semantics of Binominal Lexemes (Pepper 2019, available in draft form), in which he looks into the structure of words that are frequently constructed from two nominal parts, such as "windmill", "railway", etc. In her master's thesis, titled Body Part Metaphors as a Window to Cognition, Annika Tjuka investigates how terms for objects and landscapes are created with the help of terms originally denoting body parts (such as the "foot" of a table; see Tjuka 2019).

Both of these studies touch on the idea of semantic promiscuity, since they look at the lexicon from a concept-based perspective, as opposed to a purely form-based one, and they also look for patterns that might emerge when more than one language is considered. However, given their respective focus (Pepper on a specific type of compound, Tjuka on body-part metaphors), they do not address the typology of semantic promiscuity in general, although they provide very interesting evidence that lexical semantics plays an important role in word formation.

Computational approaches

The only study I know of that comes close to studying semantic promiscuity computationally is by Keller and Schultz (2014). In this study, the authors analyze the distribution of morpheme family sizes in English and German across a time span of 200 years. Using Birth-Death-Innovation Models (explained in more detail in the paper), they try to measure the dynamics underlying the process of word formation. Their general finding (at least for the English and German data analyzed) is that new words tend to be built from those word forms that appear less frequently across other words in a given language. If this holds true, it would mean that speakers tend to avoid words that are already too promiscuous as a basis for coining new words. What the study clearly shows is that any study of semantic promiscuity has to consider competing explanations.
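As a rough illustration of the quantity involved, the following Python sketch computes morpheme family sizes, taken here simply as the number of distinct words in a segmented lexicon that contain a given morpheme, together with their distribution. It also collects, for words that appear only in a later lexicon, the earlier family sizes of the morphemes they reuse, which is the kind of comparison behind the finding reported above. This is a simplified reading for illustration only: it does not implement the Birth-Death-Innovation Models used by Keller and Schultz, and the toy lexicon is invented.

```python
from collections import Counter

# Hypothetical toy lexicon of morpheme-segmented English words.
TOY_LEXICON = [
    ("stand",), ("under", "stand"), ("with", "stand"),
    ("fall",), ("down", "fall"), ("water", "fall"),
    ("water",), ("under", "water"), ("down",),
]


def morpheme_family_sizes(lexicon):
    """Map each morpheme to the number of distinct words that contain it."""
    sizes = Counter()
    for word in set(lexicon):
        for morpheme in set(word):
            sizes[morpheme] += 1
    return sizes


def family_size_distribution(sizes):
    """Map each family size k to the number of morphemes with that size."""
    return Counter(sizes.values())


def sizes_used_by_new_words(old_lexicon, new_lexicon):
    """For words found only in new_lexicon, collect the family sizes (measured
    in old_lexicon) of those of their morphemes that already existed."""
    old_sizes = morpheme_family_sizes(old_lexicon)
    new_words = set(new_lexicon) - set(old_lexicon)
    return sorted(
        old_sizes[m] for word in new_words for m in set(word) if m in old_sizes
    )


if __name__ == "__main__":
    sizes = morpheme_family_sizes(TOY_LEXICON)
    print(sorted(sizes.items()))
    print(family_size_distribution(sizes))
    later = TOY_LEXICON + [("with", "draw"), ("stand", "point")]
    print(sizes_used_by_new_words(TOY_LEXICON, later))
```

In the toy lexicon, "stand", "fall", and "water" each have a family size of 3, "under" and "down" a size of 2, and "with" a size of 1.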

Initial ideas for improvement

If we accept that the corpus perspective cannot help us to dive deep into the semantics, since semantics cannot be automatically inferred from corpora (at least not yet to a degree that would allow us to compare them afterwards across a sufficient sample of languages), then we need to address the question in smaller steps.

For the time being, the idea that a large proportion of the words in the lexicon of human languages are recycled from words that originally express specific meanings (whatever those meanings may be, since the idea of sensory-motor concepts is just one suggestion for a candidate semantic field) remains a hypothesis. There are enough alternative explanations that could drive the formation of new words, be it the frequency of recycled morphemes in a lexicon, as proposed by Keller and Schultz, or other factors that we do not yet know, or that I do not know because I have not yet read the relevant literature.

As long as the idea remains a hypothesis, we should first try to find ways to test it. A starting point could be the collection of larger wordlists for the languages of the world (e.g., more than 300 words per language) that are already morphologically segmented. With such a corpus, one could easily create word families by checking which morphemes are reused across words. By comparing the concepts that share a given morpheme, one could then check to what degree, for example, sensory-motor concepts form clusters with other concepts.
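A minimal Python sketch of this idea might look as follows. It assumes a morpheme-segmented wordlist for a single language, given as (concept, morphemes) pairs, builds word families by grouping concepts under each morpheme they share, and then lists, for a hypothetical set of sensory-motor concepts, the other concepts they are linked to. The German toy entries, the concept labels, and the list of affixes to ignore are illustrative assumptions; without such a filter, inflectional endings like -en would link nearly every verb to every other.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical toy wordlist for German: (concept, morpheme-segmented word).
WORDLIST = [
    ("STAND", ("steh", "en")),
    ("UNDERSTAND", ("ver", "steh", "en")),
    ("ARISE", ("ent", "steh", "en")),
    ("GET UP", ("auf", "steh", "en")),
    ("FALL", ("fall", "en")),
    ("IDEA", ("ein", "fall")),
    ("COINCIDENCE", ("zu", "fall")),
    ("HOUSE", ("haus",)),
]
# Illustrative list of affixes to ignore, so that only roots form families.
AFFIXES = frozenset({"en", "ver", "ent", "auf", "ein", "zu"})
# Hypothetical tagging of concepts as sensory-motor.
SENSORY_MOTOR = {"STAND", "FALL"}


def word_families(wordlist, ignore=frozenset()):
    """Map each morpheme to the set of concepts whose words contain it."""
    families = defaultdict(set)
    for concept, morphemes in wordlist:
        for morpheme in morphemes:
            if morpheme not in ignore:
                families[morpheme].add(concept)
    return dict(families)


def concept_links(families):
    """Count, for each unordered pair of concepts, how many morphemes they share."""
    links = defaultdict(int)
    for concepts in families.values():
        for a, b in combinations(sorted(concepts), 2):
            links[(a, b)] += 1
    return dict(links)


def neighbours(concept, links):
    """Concepts linked to `concept` by at least one shared morpheme."""
    return {b if a == concept else a for (a, b) in links if concept in (a, b)}


if __name__ == "__main__":
    links = concept_links(word_families(WORDLIST, ignore=AFFIXES))
    for concept in sorted(SENSORY_MOTOR):
        print(concept, sorted(neighbours(concept, links)))
```

For the toy data, FALL is linked to COINCIDENCE (Zufall) and IDEA (Einfall), and STAND to ARISE, GET UP, and UNDERSTAND, while HOUSE remains isolated. Counting such links per concept over many languages would give one crude measure of how promiscuous a concept is.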

All in all, my idea is far from being concrete; but what seems clear is that we will need to work on larger datasets that offer word lists for a sufficiently large sample of languages in morpheme-segmented form.

Outlook

Whenever I try to think about the problem of semantic promiscuity, asking myself whether it is a real phenomenon or just a myth, and whether a typology in the form of a world-wide ranking is possible after all, I feel that my brain is starting to itch. It feels like there is something that I cannot really grasp (yet, hopefully), and something I haven't really understood.

If you feel the same way after reading this post, there are two possible reasons: you could suffer from the same problem that I have whenever I try to get my head around semantics, or you could simply have fallen victim to a largely incomprehensible blog post. I hope, of course, that none of you will suffer from anything, and I will be glad for any additional ideas that might help us understand this matter better.

References

Basu, Malay Kumar and Carmel, Liran and Rogozin, Igor B. and Koonin, Eugene V. (2008) Evolution of protein domain promiscuity in eukaryotes. Genome Research 18: 449-461.

Blank, Andreas (1997) Prinzipien des lexikalischen Bedeutungswandels am Beispiel der romanischen Sprachen. Tübingen: Niemeyer.

Geisler, Hans (2018) Sind unsere Wörter von Sinnen? Überlegungen zu den sensomotorischen Grundlagen der Begriffsbildung. In: Kazzazi, Kerstin and Luttermann, Karin and Wahl, Sabine and Fritz, Thomas A. (eds.) Worte über Wörter: Festschrift zu Ehren von Elke Ronneberger-Sibold. Tübingen: Stauffenburg. 131-142.

Keller, Daniela Barbara and Schultz, Jörg (2014) Word formation is aware of morpheme family size. PLoS ONE 9.4: e93978.

List, Johann-Mattis and Pathmanathan, Jananan Sylvestre and Lopez, Philippe and Bapteste, Eric (2016) Unity and disunity in evolutionary sciences: process-based analogies open common research avenues for biology and linguistics. Biology Direct 11.39: 1-17.

List, Johann-Mattis (2018) Von Wortfamilien und promiskuitiven Wörtern [Of word families and promiscuous words]. Von Wörtern und Bäumen 2.10. URL: https://wub.hypotheses.org/464.

Pepper, Steve (2019) The Typology and Semantics of Binominal Lexemes: Noun-noun Compounds and their Functional Equivalents. University of Oslo: Oslo.

Sagart, Laurent and Jacques, Guillaume and Lai, Yunfan and Ryder, Robin and Thouzeau, Valentin and Greenhill, Simon J. and List, Johann-Mattis (2019) Dated language phylogenies shed light on the ancestry of Sino-Tibetan. Proceedings of the National Academy of Sciences of the United States of America 116: 10317-10322. DOI: https://doi.org/10.1073/pnas.1817972116

Schweikhard, Nathanael E. (2018) Semantic promiscuity as a factor of productivity in word formation. Computer-Assisted Language Comparison in Practice 1.11. URL: https://calc.hypotheses.org/1169.

Ströbel, Liane (2016) Introduction: Sensory-motor concepts: at the crossroad between language & cognition. In: Ströbel, Liane (ed.) Sensory-motor Concepts: at the Crossroad Between Language & Cognition. Düsseldorf University Press, pp. 11-16.

Tjuka, Annika (2019) Body Part Metaphors as a Window to Cognition: a Cross-linguistic Study of Object and Landscape Terms. Humboldt Universität zu Berlin: Berlin. DOI: https://doi.org/10.17613/j95n-c998.